For this purpose we represent the observed skeleton poses (expressed in the camera reference frame) using a spatio-temporal tensor and formulate the prediction problem as an inpainting task, in which a part of the spatio-temporal volume needs to be regressed. Specifically, we design a novel GAN architecture that is conditioned on past observations and is able to jointly forecast non-rigid body pose and its absolute position in space. In this paper we tackle all three issues.

To our understanding, this practice compromises the progress in this field. geodesic loss), the L2 distance is still being used as a common practice when benchmarking different methodologies. Even though this issue has been raised in and is partially solved during training using other metrics (e.g. In particular, the use of this metric to train a deep network favors motion predictions that converge to a static mean pose. The L2 distance, however, is known to be an inaccurate metric, specially to compare long motion sequences. And third, most approaches aim to minimize the L2 distance between the ground truth and generated motions. Second, most approaches require additional supervision in terms of action labels both during training and inference, which limits the applicability of these approaches to general motion sequences which do not correspond to the observed actions in the training data. First, all approaches address a simplified version of the problem in which global body positioning is disregarded, either by parameterizing 3D body joints in terms of position agnostic angles or in terms of body centered Cartesian coordinates. While the results of these works are very promising, they suffer from three fundamental limitations. State-of-the-art approaches formulate the problem as a sequence generation task, and solve it using Recurrent Neural Networks (RNNs), sequence-to-sequence models or encoder-decoder predictors. Recent advances in motion capture (MoCap) technologies, combined with large scale datasets such as Human3.6M, have spurred the interest for new deep learning algorithms able to forecast 3D human motion from past skeleton data. Note that the generated motion is somewhat different but semantically indistinguishable from the ground truth. The predicted sequence starts from the skeleton marked in black.

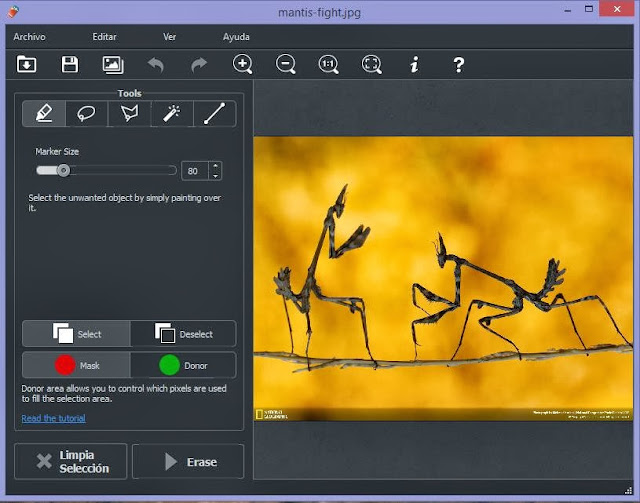

#Inpaint online 7.2 full

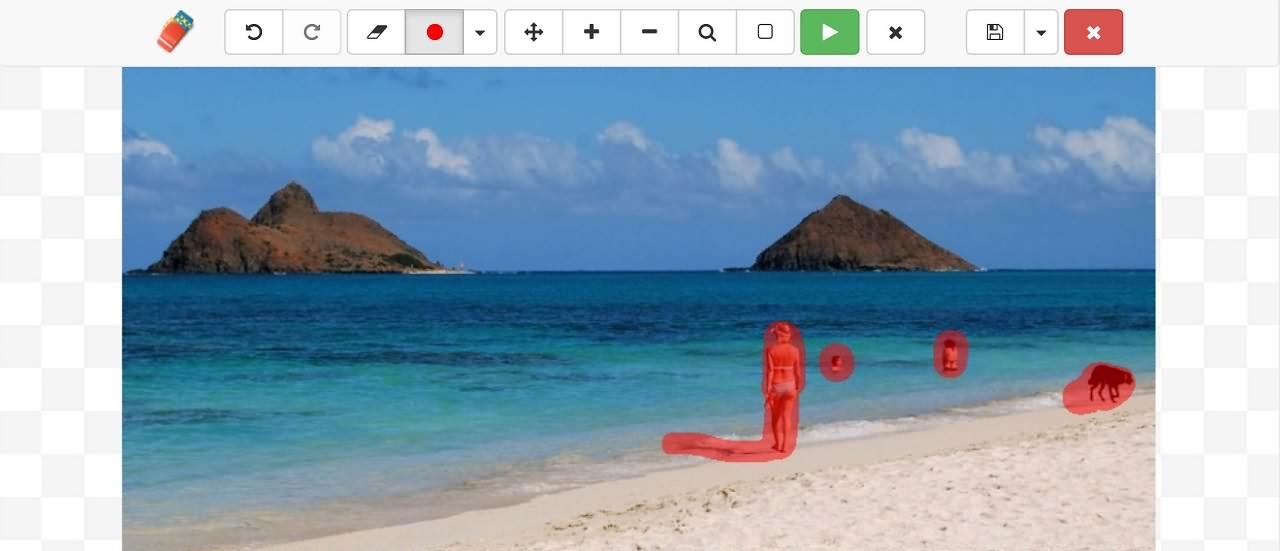

Our approach is the first in generating full body pose, including skeleton motion and absolute position in space. 1 Introduction Ground Truthįigure 1: Example result. Our experiments demonstrate that our approach achieves significant improvements over the state of the art for human motion forecasting and that it also handles situations in which past observations are corrupted by severe occlusions, noise and consecutive missing frames. We therefore propose an alternative metric that is more correlated with human perception. And finally, we argue that the L2 metric, which is considered so far by most approaches, fails to capture the actual distribution of long-term human motion. Secondly, we design a GAN architecture to learn the joint distribution of body poses and global motion, allowing us to hypothesize large chunks of the input 3D tensor with missing data. First, we consider a data representation based on a spatio-temporal tensor of 3D skeleton coordinates which allows us to formulate the prediction problem as an inpainting one, for which GANs work particularly well. Our approach builds upon three main contributions. The GAN scheme we propose can reliably provide long term predictions of two seconds or more for both the non-rigid body pose and its absolute position, and can be trained in an self-supervised manner.

a few hundred milliseconds, and typically ignore the absolute position of the skeleton w.r.t.

While recent GANs have shown promising results, they can only forecast plausible human-like motion over relatively short periods of time, i.e. We propose a Generative Adversarial Network (GAN) to forecast 3D human motion given a sequence of observed 3D skeleton poses.
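The spatio-temporal tensor and inpainting formulation described in this section can be illustrated with a minimal sketch. It assumes a sequence stored as frames × joints × (x, y, z) in the camera frame, and it assumes (this is not stated in the text) that the frames to be regressed are zero-filled and flagged by a binary mask. The function name `make_inpainting_input` and the nested-list layout are hypothetical; a real implementation would more likely use an array library.

```python
from typing import List, Tuple

Joint = Tuple[float, float, float]   # (x, y, z) in the camera reference frame
Frame = List[Joint]                  # one skeleton pose: a list of joints
Sequence = List[Frame]               # spatio-temporal tensor: frames x joints x 3


def make_inpainting_input(poses: Sequence, n_observed: int) -> Tuple[Sequence, List[int]]:
    """Split a pose sequence into an observed part and a part to be regressed.

    Returns the masked sequence (future frames zero-filled, an assumption of
    this sketch) and a per-frame mask: 1 for observed frames, 0 for frames
    that a generator would have to inpaint.
    """
    n_frames = len(poses)
    if not 0 < n_observed <= n_frames:
        raise ValueError("n_observed must be in the range 1..len(poses)")

    # The mask marks which slices of the spatio-temporal volume are known.
    mask = [1 if t < n_observed else 0 for t in range(n_frames)]

    # Observed frames are copied; the remaining frames are blanked out.
    masked = [
        [joint if m else (0.0, 0.0, 0.0) for joint in frame]
        for frame, m in zip(poses, mask)
    ]
    return masked, mask


# Hypothetical example: 10 frames, 4 joints, every coordinate set to 1.0.
sequence = [[(1.0, 1.0, 1.0) for _ in range(4)] for _ in range(10)]
masked_sequence, frame_mask = make_inpainting_input(sequence, n_observed=6)
```

Under these assumptions, forecasting amounts to filling in the frames where the mask is 0. The same mask could in principle mark occluded joints or missing frames inside the observed part, although the sketch only handles the future-frames case.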
